Istio used as an API Gateway

Overview

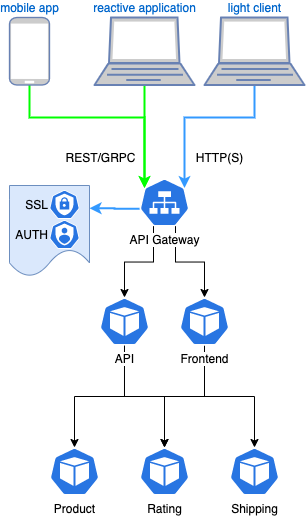

An API gateway is a core component of an API management solution. It acts as the single entry point into a system, allowing multiple APIs or micro-services to act cohesively and provide a uniform experience to the user. The most important role of the API gateway is to ensure reliable processing of every API call. In addition, the API gateway enforces enterprise-grade security from a central management plane.

Modern applications tend to display lots of different information, sometimes on the same page. For example, shopping applications usually show the number of items in the shopping cart, customer reviews, low-inventory warnings or shipping options.

In a micro-service world, this information can come from different components. While the application could call each service individually, most of the time this is not a good idea: it leads to an excessive number of DNS requests, TCP connection setups, TLS negotiations and so on, and it defeats browser and HTTP/2 optimizations. With a single service endpoint, connections can be pooled, requests can be multiplexed and a single SSL certificate can be used, offering a better user experience.

The goal of the API Gateway is to provide a central entry point that routes requests to each back-end micro-service.

Read more about API Gateways at https://microservices.io/patterns/apigateway.html

Istio already includes an Ingress Gateway component, the Istio Ingress Gateway, which can be configured as an API Gateway.

Base scenario

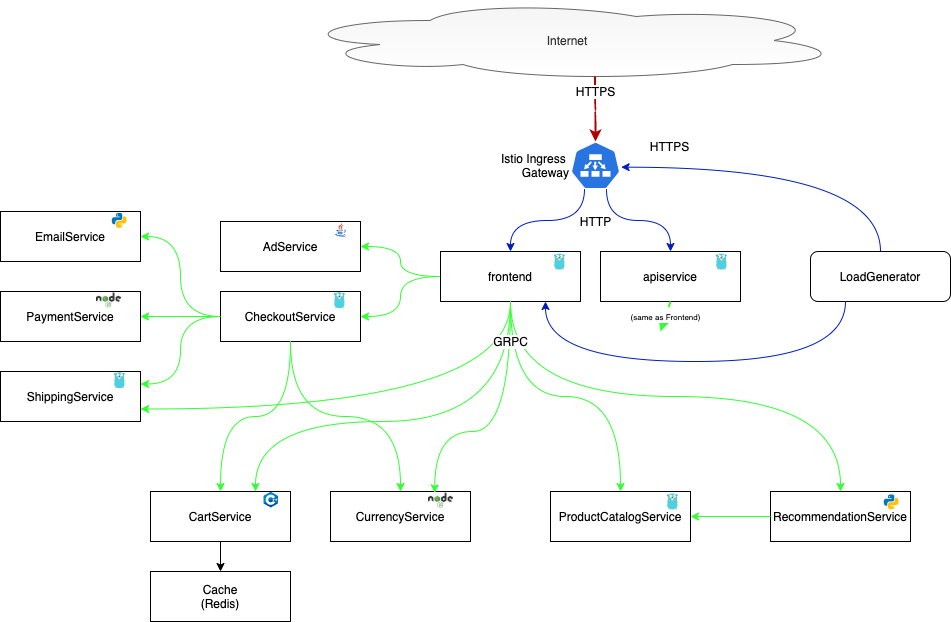

We are going to use the Hipster shop application to demonstrate the use of the Istio Ingress Gateway as an API Gateway.

The Frontend micro-service is responsible for generating the website HTML code for browsers. The APIService micro-service, on the other hand, only outputs JSON data, meant to be consumed by a mobile application or by a reactive website that renders the HTML from that JSON. While this may seem simplistic, it is a common pattern for modern websites.

We will then configure the API Gateway (Istio Ingress Gateway) to federate the two services behind a single URL. Then we will add SSL.

Prerequisites

Before you begin, make sure the following are set up:

- Kubernetes cluster with version > 1.15.

- Installed Istio by following the instructions in the Installation guide.

- Installed the Ingress Gateway resource.

- Enabled automatic sidecar injection.

Set up the Hipster shop demo application

# create the namespace
kubectl create namespace hipstershop

# enable automatic sidecar injection
kubectl label namespace hipstershop istio-injection=enabled --overwrite=true

The Hipster shop application is templated using Tanka, a templating application made specifically for Kubernetes that uses Jsonnet as its templating engine.

Our repository includes a script to install the Tanka binary, tk. You can also install it yourself by following the Tanka documentation.

# clone the repository
cd /tmp
git clone https://github.com/tetratelabs/microservices-demo.git
cd microservices-demo

# install tanka
./tanka/install-tanka.sh

This installs Tanka for Linux in /usr/local/bin/tk. You can override the target by setting the DEST variable. You can also change the binary format to suit your computer by setting the OS and ARCH variables. For example, on macOS:

OS=darwin DEST=/tmp ./tanka/install-tanka.sh
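If you are unsure which OS and ARCH values to pass, a small helper can derive them from uname. This is a sketch that assumes install-tanka.sh expects Go-style names (linux/darwin, amd64/arm64), as the OS=darwin example above suggests; check the script for the values it actually accepts. The function names tanka_os and tanka_arch are hypothetical.

```shell
# Hypothetical helpers: map `uname -s` / `uname -m` output to Go-style names.
# Assumption: install-tanka.sh accepts these names (OS=darwin suggests so).
tanka_os() {
  case "$1" in
    Linux)  echo linux ;;
    Darwin) echo darwin ;;
    *)      echo "unsupported OS: $1" >&2; return 1 ;;
  esac
}

tanka_arch() {
  case "$1" in
    x86_64|amd64)  echo amd64 ;;
    aarch64|arm64) echo arm64 ;;
    *)             echo "unsupported architecture: $1" >&2; return 1 ;;
  esac
}

# Usage (from the microservices-demo directory):
#   OS=$(tanka_os "$(uname -s)") ARCH=$(tanka_arch "$(uname -m)") \
#     DEST=/tmp ./tanka/install-tanka.sh
```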

From now on, we assume the tk binary is in your $PATH.

We can now generate the YAML templates and deploy the Hipster shop application:

tk show tanka/environments/manual --dangerous-allow-redirect \
  -e manualConfig="{
    project: \"hipstershop\",
    namespace: \"hipstershop\",
    image+: {
      repo: \"prune\",
    },
    loadgenerator: {
      deployments: [],
    },
  }" \
  -e selectedApps='[]' > /tmp/hipstershop.yaml

kubectl apply -f /tmp/hipstershop.yaml

Once deployed, you can get the resource list:

kubectl -n hipstershop get pods
NAME READY STATUS RESTARTS AGE
adservice-68cb46dc65-j5r9x 2/2 Running 0 83s
apiservice-686c6d7bcb-9kmkk 2/2 Running 0 83s
cartservice-569dc4ff6f-mw8nm 2/2 Running 1 82s
checkoutservice-54b459c8c6-d8dgq 2/2 Running 0 82s
currencyservice-767d57bb6c-v57rz 2/2 Running 0 82s
emailservice-8f58d8d75-k5ghr 2/2 Running 0 82s
frontend-6f66d588c8-7wz5f 2/2 Running 0 82s
paymentservice-79ccc544c7-r6jdj 2/2 Running 0 81s
productcatalogservice-db9f7df84-m2g6k 1/1 Running 0 81s
recommendationservice-7d4c9bf6-4rzpv 2/2 Running 0 81s
redis-cart-7c95bcd96d-5l259 2/2 Running 0 81s
shippingservice-7f55bd5567-lmdpf 2/2 Running 0 80s

kubectl -n hipstershop get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
adservice ClusterIP 10.51.252.90 <none> 9555/TCP 5d21h
apiservice ClusterIP 10.51.248.49 <none> 8080/TCP 25h
cartservice ClusterIP 10.51.240.119 <none> 7070/TCP 5d21h
checkoutservice ClusterIP 10.51.254.230 <none> 5050/TCP 5d21h
currencyservice ClusterIP 10.51.250.203 <none> 7000/TCP 5d21h
emailservice ClusterIP 10.51.254.242 <none> 8080/TCP 5d21h
frontend ClusterIP 10.51.253.210 <none> 8080/TCP 5d21h
paymentservice ClusterIP 10.51.247.196 <none> 50051/TCP 5d21h
productcatalogservice ClusterIP 10.51.249.17 <none> 3550/TCP 5d21h
recommendationservice ClusterIP 10.51.243.135 <none> 8080/TCP 5d21h
redis-cart ClusterIP 10.51.240.128 <none> 6379/TCP 5d21h
shippingservice ClusterIP 10.51.255.125 <none> 50051/TCP 5d21h
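A quick way to spot pods without a sidecar is the READY column: with automatic injection enabled, each Hipster shop pod should report 2/2 (the application container plus istio-proxy). The sketch below assumes one application container per pod; pods_without_sidecar is a hypothetical helper that reads the `kubectl get pods` output on stdin.

```shell
# Hypothetical helper: list pods whose READY column is not "2/2".
# Such a pod either has a container that is not ready yet or was started
# without the istio-proxy sidecar (assumes one app container per pod).
pods_without_sidecar() {
  # Skip the header line; $1 is NAME, $2 is READY ("ready/total").
  awk 'NR > 1 && $2 != "2/2" { print $1 " (" $2 ")" }'
}

# Usage:
#   kubectl -n hipstershop get pods | pods_without_sidecar
```

In the listing above, productcatalogservice reports 1/1 and would be flagged. If such a pod was created before the namespace was labeled for injection, restarting its Deployment (assuming it is named productcatalogservice) with `kubectl -n hipstershop rollout restart deployment/productcatalogservice` lets the injector process the new pod.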

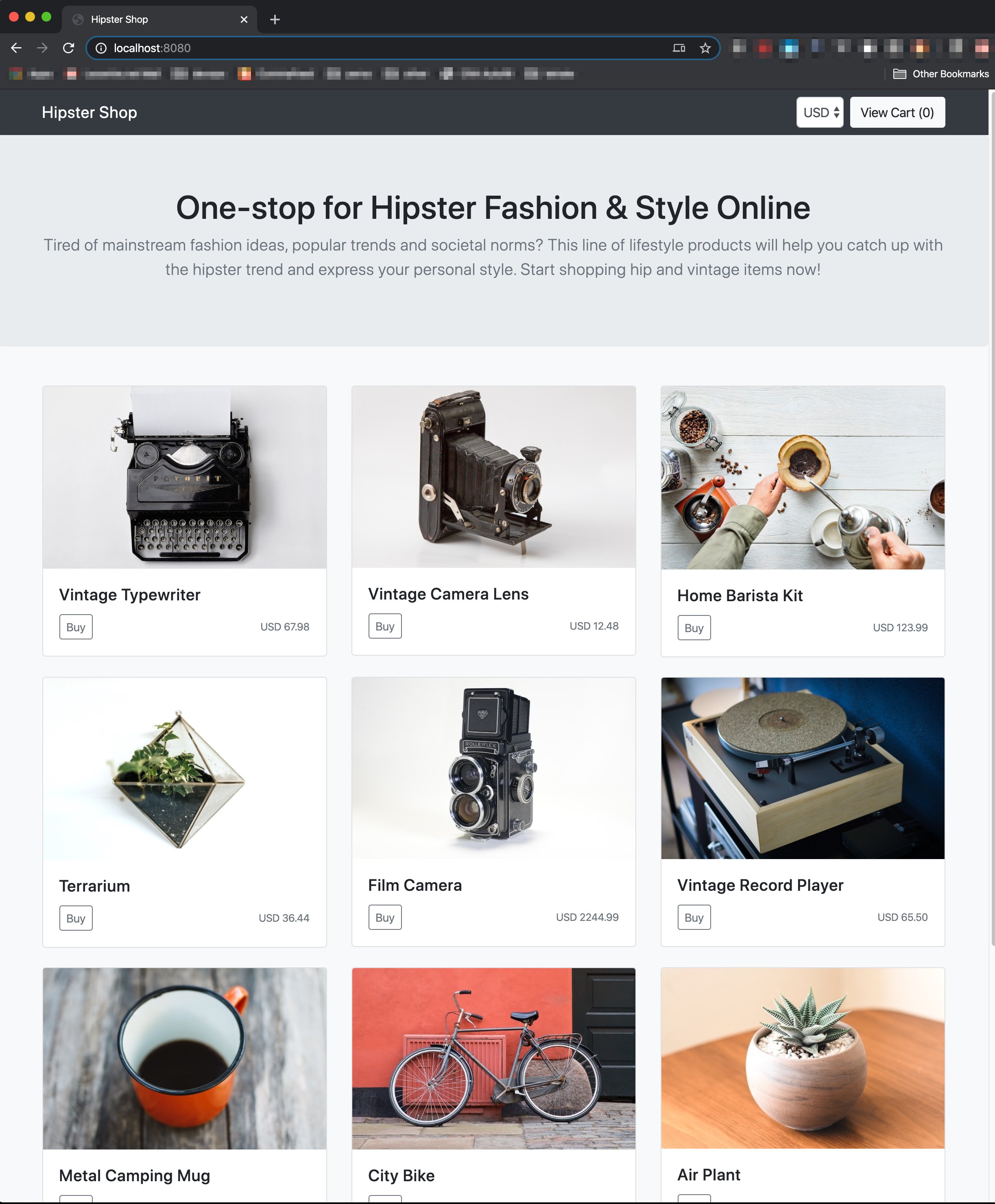

You can test your application by using a port-forward from your local computer to the frontend pod:

kubectl -n hipstershop port-forward deployment/frontend 8080

Then open your browser to http://localhost:8080/

Configure the Ingress Gateway

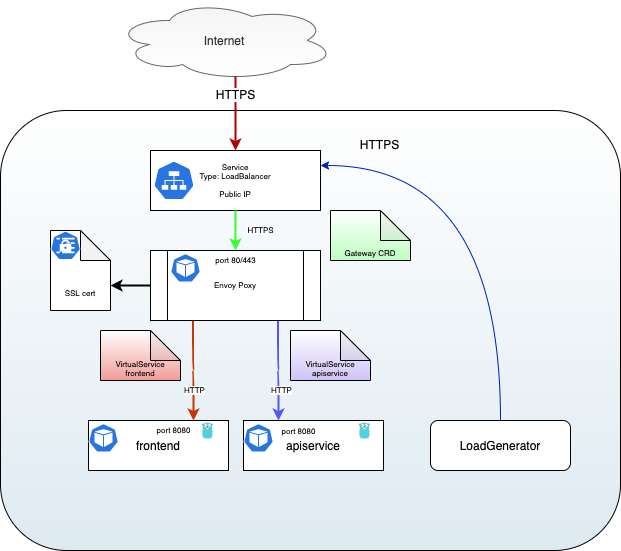

Now that the application is running, we need to set up the Istio Ingress Gateway so that it accepts traffic and sends it to the right micro-service.

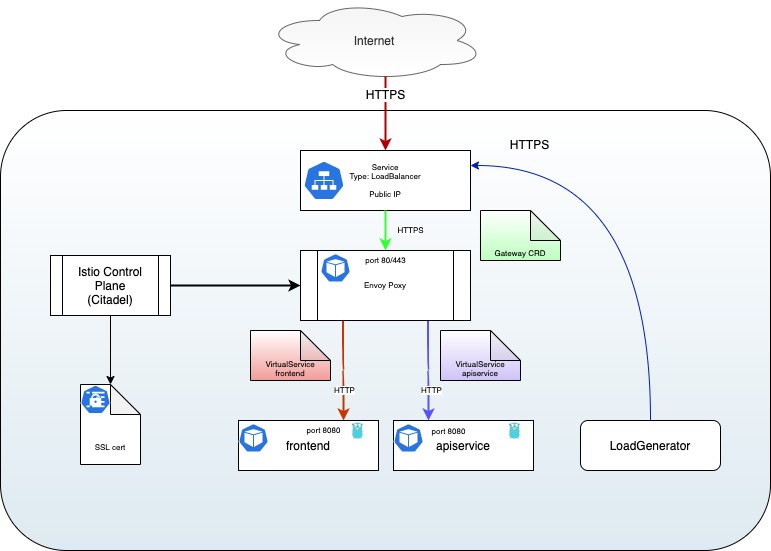

The Istio Ingress Gateway is an Envoy proxy deployed in the istio-system namespace. It uses a Kubernetes Service of type LoadBalancer, which attaches a public IP to it so that it is reachable from the Internet.

The Istio Ingress Gateway was already deployed when you set up Istio (see Prerequisites).

In a production environment you may decide to deploy a dedicated Ingress Gateway in another namespace, for example to enforce the authorization level of different teams working on the same Kubernetes cluster, or to fully segregate traffic between namespaces. Refer to the Ingress Gateway docs for more details.

To test the service with a working host name, we will use nip.io, a wildcard DNS service: any host name of the form <name>.<IP>.nip.io resolves to <IP>. This is a simple way to map a host name to a specific IP address without configuring DNS.

We need the public IP of the Istio Ingress Gateway:

GATEWAY_IP=$(kubectl get svc istio-ingressgateway -n istio-system -o jsonpath="{.status.loadBalancer.ingress[0].ip}")
echo $GATEWAY_IP

You can check that the host name resolves by using nslookup or dig. Both should point to the Gateway IP:

dig hipstershop.${GATEWAY_IP}.nip.io
nslookup hipstershop.${GATEWAY_IP}.nip.io
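Every following command embeds GATEWAY_IP in the host name, so it is worth failing early when it is empty. On some clouds (AWS, for example) the LoadBalancer status exposes a hostname instead of an IP, and the jsonpath expression above then returns nothing. The sketch below builds the nip.io host name and rejects anything that does not look like an IPv4 address; nip_host is a hypothetical helper, not part of Istio or nip.io.

```shell
# Hypothetical helper: build the nip.io host name used in this guide,
# failing if the argument is empty or not shaped like an IPv4 address.
nip_host() {
  local ip="$1"
  # Accept only dotted-quad strings (nip.io resolves <name>.<IPv4>.nip.io).
  if ! printf '%s\n' "$ip" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$'; then
    echo "GATEWAY_IP does not look like an IPv4 address: '$ip'" >&2
    return 1
  fi
  printf 'hipstershop.%s.nip.io\n' "$ip"
}

# Usage:
#   HOST=$(nip_host "$GATEWAY_IP") && dig +short "$HOST"
```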

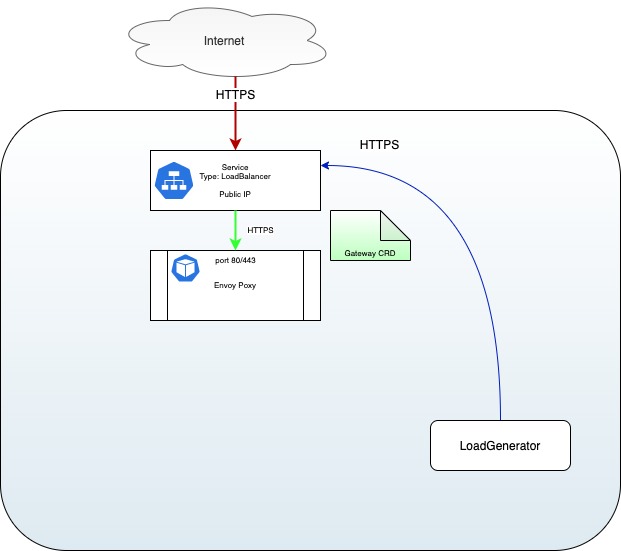

Gateway

Now that the Ingress Gateway pod and service exist, we can configure the gateway by creating a Gateway Custom Resource that tells Istio to listen on a specific host name and port. We use a single Gateway for both the frontend and the API service, as we want a single URL to reach both.

kubectl apply -n hipstershop -f - <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: hipstershop-gateway
spec:
  selector:
    istio: ingressgateway # use Istio default gateway implementation
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "hipstershop.${GATEWAY_IP}.nip.io"
EOF

Here is what we have so far:

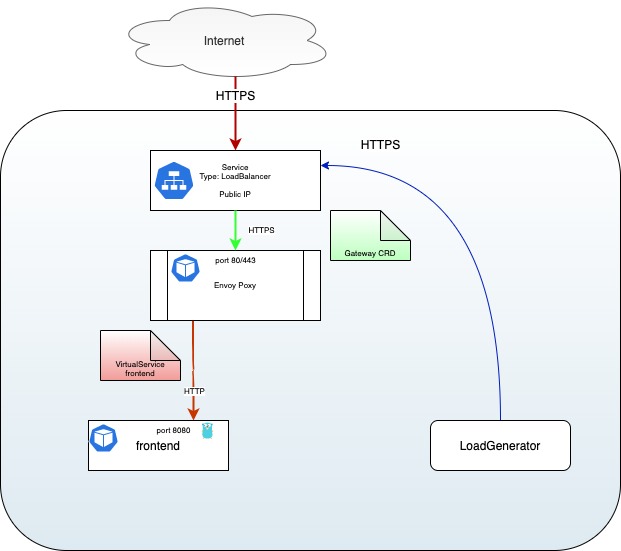

VirtualService

Now that we have a Gateway Custom Resource, we can create the two VirtualServices that will split and route the traffic to our two applications.

For the frontend service:

kubectl apply -n hipstershop -f - <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: hipstershop-frontend
spec:
  hosts:
  - "hipstershop.${GATEWAY_IP}.nip.io"
  gateways:
  - hipstershop-gateway
  http:
  - route:
    - destination:
        port:
          number: 8080
        host: frontend
EOF

At this point you should be able to access the Hipster shop store at http://hipstershop.${GATEWAY_IP}.nip.io/

The API, however, is not reachable yet:

curl -kvs http://hipstershop.${GATEWAY_IP}.nip.io/api/v1
...
< HTTP/1.1 404 Not Found
...

This is because all the traffic, including requests to /api, is routed to the frontend service:

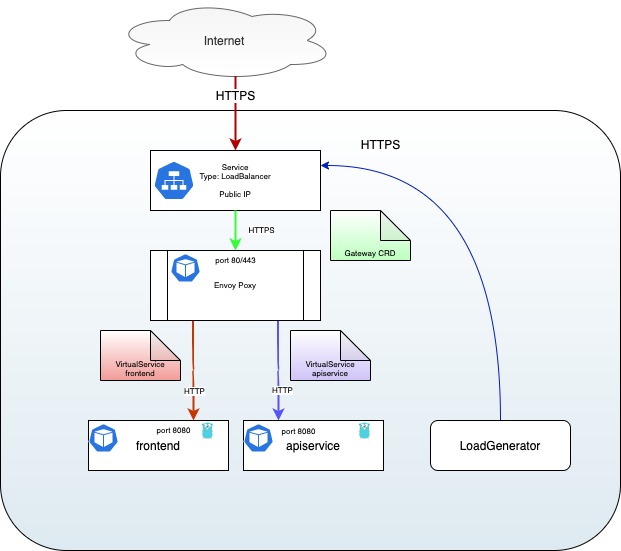

Let's add the VirtualService for the API:

kubectl apply -n hipstershop -f - <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: hipstershop-apiservice
spec:
  hosts:
  - "hipstershop.${GATEWAY_IP}.nip.io"
  gateways:
  - hipstershop-gateway
  http:
  - match:
    - uri:
        prefix: /api
    route:
    - destination:
        port:
          number: 8080
        host: apiservice
EOF

We can now query both the frontend service and the API:

curl -ks http://hipstershop.${GATEWAY_IP}.nip.io/ -H "accept: application/json"
... lots of HTML

curl -ks http://hipstershop.${GATEWAY_IP}.nip.io/api/v1 -H "accept: application/json"
{"ad":{"redirect_url":"/product/0PUK6V6EV0","te ......
lots of JSON...
}

Our situation is now as follows:

Takeaways

We created a Gateway Custom Resource to listen for external traffic on the Ingress Gateway. Then we created two VirtualServices, each one matching a URL pattern and sending the request to its specific back-end. The frontend VirtualService has no match condition, so it catches every URL (equivalent to the prefix /), while the API VirtualService matches any URL starting with /api.
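To make the matching concrete, here is a deliberately simplified model of the two routes above. It is an illustration only, not how Istio evaluates or merges routes, and route_for is a hypothetical name. It highlights one property of prefix matching: a prefix is a plain string prefix, so /api also matches a path such as /apiary.

```shell
# Simplified model (illustration only) of the two routes configured above:
# a URI starting with /api goes to apiservice, everything else to frontend.
route_for() {
  case "$1" in
    /api*) echo "apiservice:8080" ;;  # prefix match is a plain string prefix
    *)     echo "frontend:8080" ;;    # catch-all route of hipstershop-frontend
  esac
}

# Usage:
#   route_for /api/v1      # -> apiservice:8080
#   route_for /apiary      # -> apiservice:8080 (still matches the prefix)
```

If only the /api/ subtree should reach apiservice, a prefix of /api/ is stricter, at the cost of no longer matching the bare /api path.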

All this was achieved using Istio's request routing and traffic shifting features. You can read more about them in the Istio documentation.

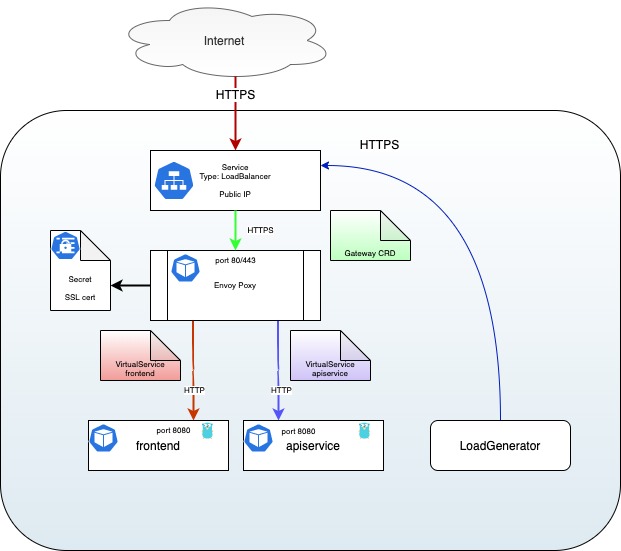

Add SSL

Thanks to the Istio Ingress Gateway, we can easily add an SSL certificate to support HTTPS requests. The Istio Ingress Gateway can load SSL certificates from Kubernetes Secrets or through the Secret Discovery Service (SDS).

SSL certificates have an expiration date set at creation time. Once a certificate has expired, client connections to your website will either fail or display an error message. You have to take care of renewing and rotating your certificates so that your Gateway always presents a valid certificate.

By default, the Istio Ingress Gateway reads the Secret containing your SSL certificate only once, when it starts. If you choose this approach, you will have to restart the Istio Ingress Gateway after updating your Secret.

When using the Secret Discovery Service (SDS), the Secret is monitored by Citadel, an Istio component, and is transferred to the Istio Ingress Gateway whenever it changes. This is the preferable solution for a production environment. It also makes it possible to use certificate management tools such as cert-manager.

Refer to the linked documentation for more details.

Generate a Self-Signed Certificate

First we have to create a Root Certificate and Key:

openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 \
  -subj '/O=hipstershop Inc./CN=hipstershop.com' -keyout hipstershop-ca.key \
  -out hipstershop-ca.crt

Then we create a certificate signing request for our host name and sign it with the root certificate:

openssl req -out hipstershop.csr -newkey rsa:2048 -nodes -keyout hipstershop.key \
  -subj "/CN=hipstershop.${GATEWAY_IP}.nip.io/O=hipstershop organization"

openssl x509 -req -days 365 -CA hipstershop-ca.crt -CAkey hipstershop-ca.key \
  -set_serial 0 -in hipstershop.csr -out hipstershop.crt

SSL with Certificate from a Kubernetes Secret

We can now store the SSL certificate in a Kubernetes Secret. The Secret has to be created in the same namespace as the Istio Ingress Gateway, in our case the default Istio namespace, istio-system:

kubectl create -n istio-system secret tls istio-ingressgateway-certs \
  --key hipstershop.key --cert hipstershop.crt

We can now modify the gateway to also accept HTTPS traffic on port 443:

kubectl apply -n hipstershop -f - <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: hipstershop-gateway
spec:
  selector:
    istio: ingressgateway # use Istio default gateway implementation
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "hipstershop.${GATEWAY_IP}.nip.io"
  - port:
      number: 443
      name: https
      protocol: HTTPS
    tls:
      mode: SIMPLE
      serverCertificate: /etc/istio/ingressgateway-certs/tls.crt
      privateKey: /etc/istio/ingressgateway-certs/tls.key
    hosts:
    - "hipstershop.${GATEWAY_IP}.nip.io"
EOF

The result is as follows:

Then query the application over HTTPS:

curl -ks https://hipstershop.${GATEWAY_IP}.nip.io/api/v1/product/66VCHSJNUP \
  -H "accept: application/json"
{"Item":{"id":"66VCHSJNUP","name":"Vintage Camera Lens","description":"You won't have a camera to use it and it probably doesn't work anyway.","picture":"/static/img/products/camera-lens.jpg","price_usd":{"currency_code":"USD","units":12,"nanos":490000000},"categories":["photography","vintage"]},"Price":{"currency_code":"USD","units":12,"nanos":489999999}}

SSL with SDS

To use SDS with the Istio Ingress Gateway, you have to install Istio with the --set values.gateways.istio-ingressgateway.sds.enabled=true option. Refer to the Istio documentation to make sure Istio is deployed as needed.

We will reuse the SSL certificates we built before, but instead of adding them to the Istio Ingress Gateway Secret, we will put them in a dedicated hipstershop-credential Secret.

When using SDS, the SSL certificate is provided by Citadel, the "security" module of Istio, directly to the Envoy proxy (sidecar or ingress gateway). Citadel takes care of monitoring the Secrets holding the certificates and pushes updates to the Envoy proxies when needed.

Let's create a new secret for our application:

kubectl create -n istio-system secret generic hipstershop-credential \
  --from-file=key=hipstershop.key \
  --from-file=cert=hipstershop.crt

We can now modify the gateway to also accept HTTPS traffic on port 443 using SDS:

kubectl apply -n hipstershop -f - <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: hipstershop-gateway
spec:
  selector:
    istio: ingressgateway # use Istio default gateway implementation
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "hipstershop.${GATEWAY_IP}.nip.io"
  - port:
      number: 443
      name: https
      protocol: HTTPS
    tls:
      mode: SIMPLE
      credentialName: "hipstershop-credential"
    hosts:
    - "hipstershop.${GATEWAY_IP}.nip.io"
EOF

Here, SDS is activated by using the credentialName key to reference the name of the Secret.

We can now query the application over HTTPS:

curl -ks https://hipstershop.${GATEWAY_IP}.nip.io/api/v1/product/66VCHSJNUP \
  -H "accept: application/json"
{"Item":{"id":"66VCHSJNUP","name":"Vintage Camera Lens","description":"You won't have a camera to use it and it probably doesn't work anyway.","picture":"/static/img/products/camera-lens.jpg","price_usd":{"currency_code":"USD","units":12,"nanos":490000000},"categories":["photography","vintage"]},"Price":{"currency_code":"USD","units":12,"nanos":489999999}}

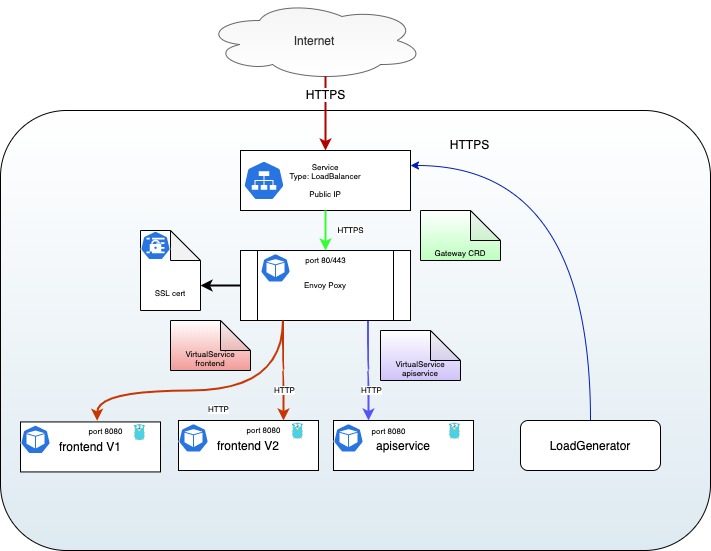

Using the API Gateway for canary deployment

Canary deployment is the practice of running two different versions of the same application at the same time and controlling the traffic flow between them. It usually means sending 100% of the traffic to the old V1 application, deploying the new V2 application without traffic, then switching some traffic (say 1%) to it and monitoring its behavior. After some time, if everything is fine, you increase the traffic to 10%, then 20%, and so on, until V2 receives 100% of the traffic and V1 can be removed.

With the Istio Ingress Gateway you can go even further, as you can use all the power of VirtualServices to, for example, route old clients to V1 while every new client running the latest mobile application reaches the V2 back-end, configure circuit breakers, or inject delays in responses to better test your services.

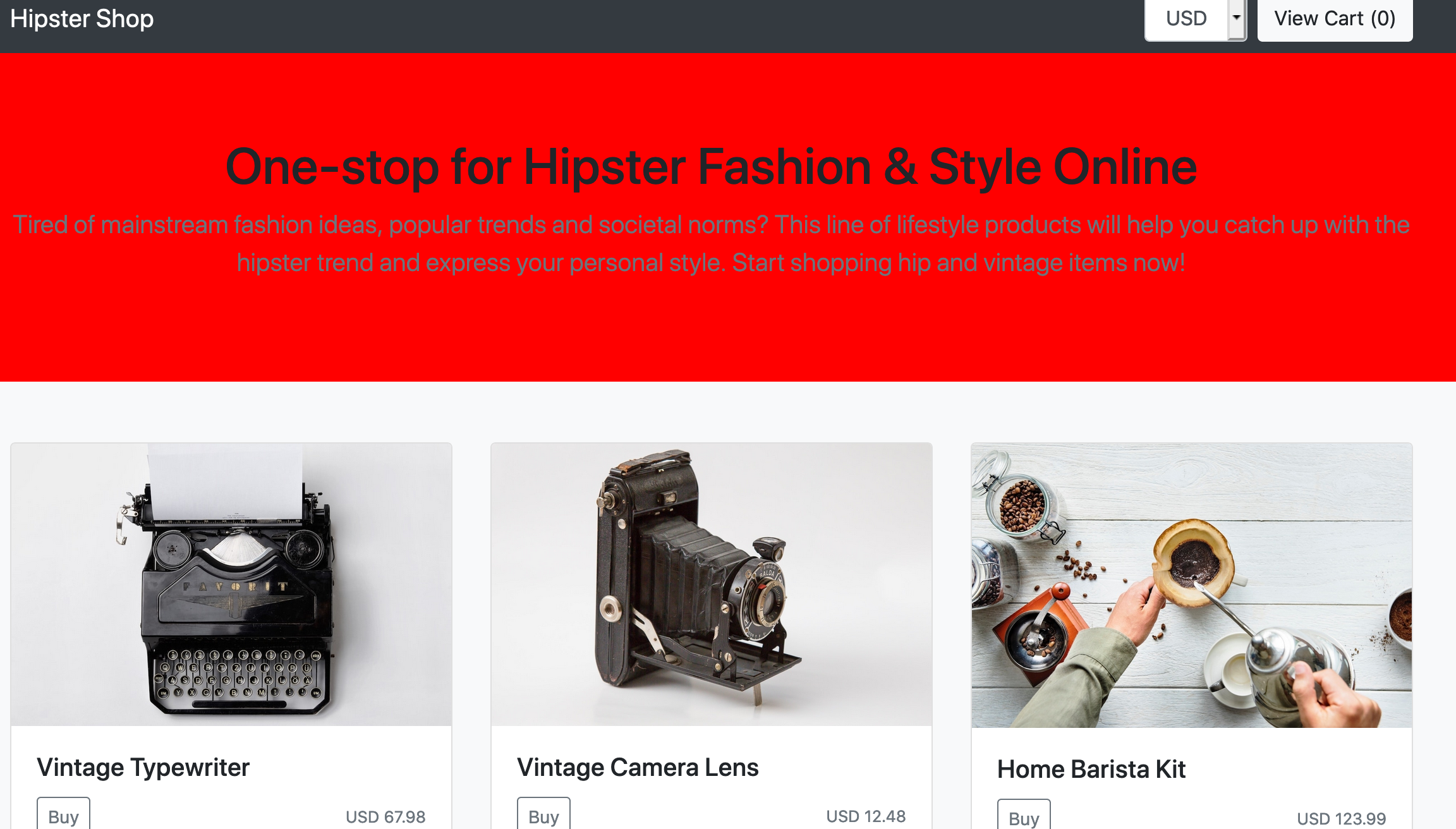

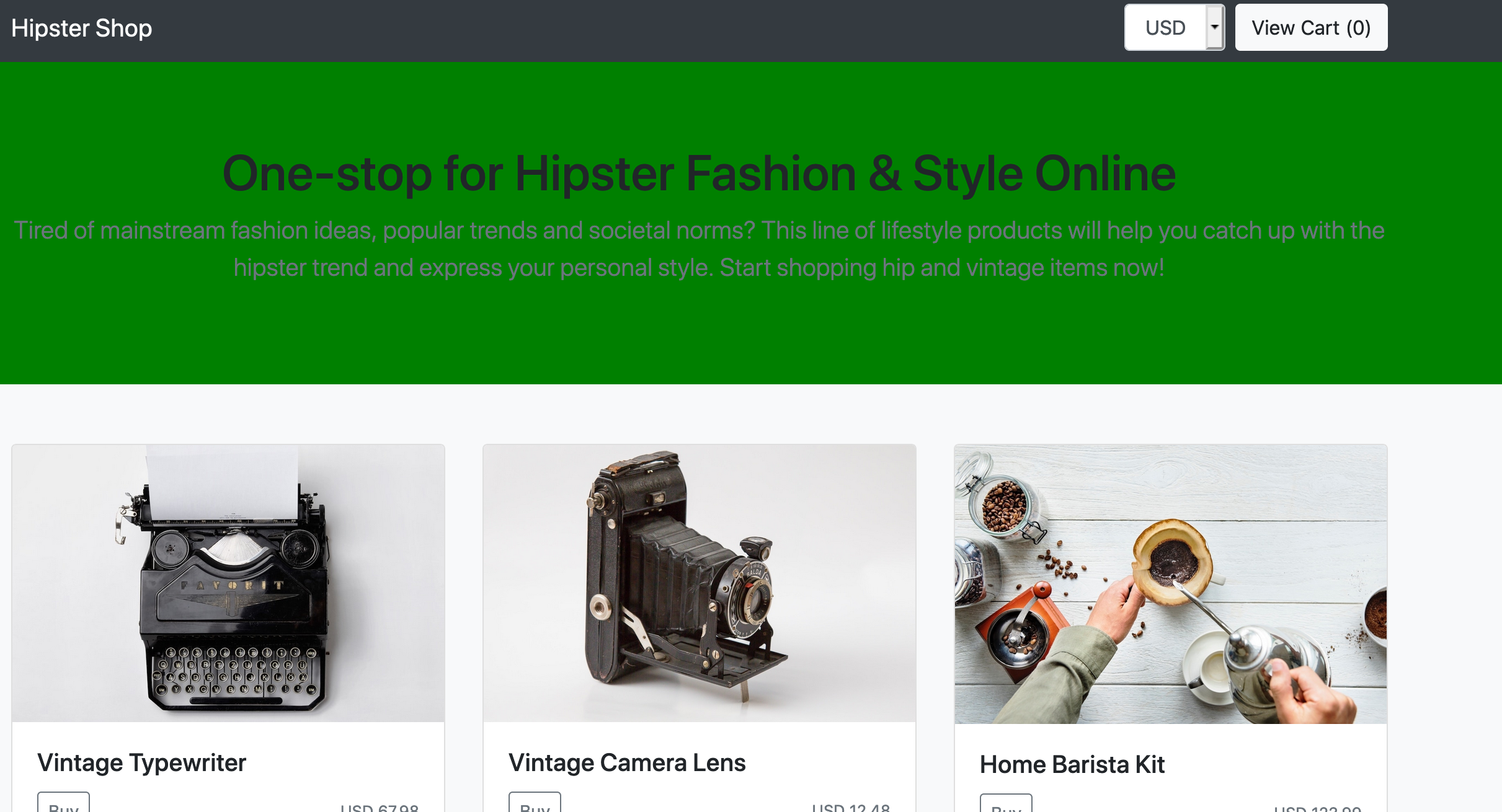

To demonstrate that, let's deploy two versions of the front end application, a V1 (default) with a red banner and a V2 with a green banner.

This is really easy thanks to our Tanka templates:

tk show tanka/environments/manual --dangerous-allow-redirect \
  -e manualConfig="{
    project: \"hipstershop\",
    namespace: \"hipstershop\",
    image+: {
      repo: \"prune\",
    },
    loadgenerator: {
      deployments: [],
    },
    frontend+: {
      deployments: [
        {name: \"frontend\", version: \"v1\", withSvc: true, localEnv:{BANNER_COLOR: \"red\"}, replica: 1, image: {}},
        {name: \"frontend-v2\", version: \"v2\", withSvc: false, localEnv:{BANNER_COLOR: \"green\"}, replica: 1, image: {}},
      ],
    },
  }" \
  -e selectedApps='[]' > /tmp/hipstershop.yaml

kubectl apply -f /tmp/hipstershop.yaml

You should now have two versions of the front end:

kubectl -n hipstershop get pods -l app=frontend
NAME READY STATUS RESTARTS AGE
frontend-54f888c6fd-qrcv7 2/2 Running 0 57s
frontend-v2-776f875b6c-5lkzb 2/2 Running 0 57s

If you browse to your shop URL, the top banner of the website should be either red or green depending on the back-end you reach. Both pods match the frontend Service, and the default load-balancing policy is round robin, so requests are spread across the two back-ends, although consecutive requests do not always alternate.

We can test this using curl too:

while true; do curl -ks https://hipstershop.${GATEWAY_IP}.nip.io/ \
  | grep -E 'red;|green;'; sleep 1; done

style="background-color: green;"

style="background-color: red;"

style="background-color: green;"

style="background-color: green;"

style="background-color: green;"

style="background-color: red;"

style="background-color: green;"

style="background-color: green;"

style="background-color: red;"

style="background-color: green;"

style="background-color: green;"

style="background-color: red;"

style="background-color: red;"

style="background-color: red;"

style="background-color: red;"

Overall, traffic is split roughly 50/50 between the two front ends.
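Rather than eyeballing an endless loop, you can take a fixed number of samples and count them. The sketch below (tally_banners is a hypothetical helper) reads the grep output shown above on stdin and prints how many responses, and what share, came from the red (V1) and green (V2) front ends.

```shell
# Hypothetical helper: count red (V1) and green (V2) banner lines on stdin
# and print each count with its percentage of the total.
tally_banners() {
  awk '
    /red;/   { red++ }
    /green;/ { green++ }
    END {
      red += 0; green += 0               # force numeric zero if unseen
      total = red + green
      if (total == 0) { print "no samples"; exit 1 }
      printf "red=%d (%.0f%%) green=%d (%.0f%%)\n", red, 100 * red / total, green, 100 * green / total
    }'
}

# Usage: take 100 samples instead of looping forever.
#   for i in $(seq 1 100); do
#     curl -ks "https://hipstershop.${GATEWAY_IP}.nip.io/" | grep -E 'red;|green;'
#   done | tally_banners
```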

Send traffic to V1 only

To switch traffic between versions, we need to define subsets that tell the two versions apart. This is done by creating a DestinationRule. In our case, we use the label named version as the differentiation key:

kubectl apply -n hipstershop -f - <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: frontend-destination
spec:
  host: frontend.hipstershop.svc.cluster.local
  subsets:
  - name: v1
    labels:
      version: v1
  - name: v2
    labels:
      version: v2
EOF

We can now use these subsets in the VirtualService to split the traffic as we want:

kubectl apply -n hipstershop -f - <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: hipstershop-frontend
spec:
  hosts:
  - "hipstershop.${GATEWAY_IP}.nip.io"
  gateways:
  - hipstershop-gateway
  http:
  - route:
    - destination:
        port:
          number: 8080
        host: frontend
        subset: v1
EOF

As you can see, we are only using one destination, with the v1 subset.

Using curl again, we can make sure that only the V1 server is reached. Here, the V1 server uses the red banner, so we grep for the banner color to limit the output of the command:

curl -ks https://hipstershop.${GATEWAY_IP}.nip.io/ | grep -E 'red;|green;'
style="background-color: red;"

Shift 10% of the traffic to V2

Update the VirtualService to send 10% of the traffic to the V2 front end. To do that, we set a weight on each destination: the percentage of traffic it receives, from 0 to 100. The weights MUST add up to 100:

kubectl apply -n hipstershop -f - <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: hipstershop-frontend
spec:
  hosts:
  - "hipstershop.${GATEWAY_IP}.nip.io"
  gateways:
  - hipstershop-gateway
  http:
  - route:
    - destination:
        port:
          number: 8080
        host: frontend
        subset: v1
      weight: 90
    - destination:
        port:
          number: 8080
        host: frontend
        subset: v2
      weight: 10
EOF
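Advancing the canary (10%, 20%, and so on) means repeating this edit with different weights. A minimal sketch, assuming GATEWAY_IP is set as in the previous steps, renders the same VirtualService for any V2 weight and derives the V1 weight as 100 minus V2, so the two always add up to 100. render_frontend_vs is a hypothetical helper.

```shell
# Hypothetical helper: print the hipstershop-frontend VirtualService for a
# given V2 weight (0-100). V1 gets 100 - V2, so the weights always sum to 100.
# Assumes GATEWAY_IP is already set, as in the previous steps.
render_frontend_vs() {
  local v2="$1" v1
  # Reject empty, non-numeric and zero-padded values (e.g. 010, which
  # shell arithmetic would read as octal).
  case "$v2" in
    ''|*[!0-9]*|0?*)
      echo "V2 weight must be an integer from 0 to 100: '$v2'" >&2
      return 1 ;;
  esac
  if [ "$v2" -gt 100 ]; then
    echo "V2 weight must be an integer from 0 to 100: '$v2'" >&2
    return 1
  fi
  v1=$((100 - v2))
  cat <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: hipstershop-frontend
spec:
  hosts:
  - "hipstershop.${GATEWAY_IP}.nip.io"
  gateways:
  - hipstershop-gateway
  http:
  - route:
    - destination:
        port:
          number: 8080
        host: frontend
        subset: v1
      weight: ${v1}
    - destination:
        port:
          number: 8080
        host: frontend
        subset: v2
      weight: ${v2}
EOF
}

# Usage: move the canary to 20% on V2.
#   render_frontend_vs 20 | kubectl apply -n hipstershop -f -
```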

You can use a while loop with a curl command to send a request every second and confirm that roughly 10% of the requests reach the V2 front end:

while true; do curl -ks https://hipstershop.${GATEWAY_IP}.nip.io/ | grep -E 'red;|green;'; sleep 1; done

style="background-color: red;"

style="background-color: red;"

style="background-color: red;"

style="background-color: red;"

style="background-color: red;"

style="background-color: red;"

style="background-color: green;"

style="background-color: red;"

style="background-color: red;"

style="background-color: red;"