Configuration Data Flow

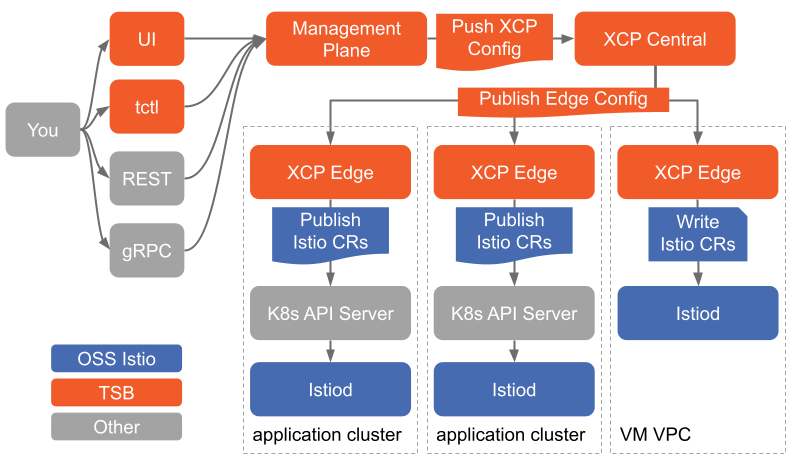

We described the layers that make up TSB in the Architecture section: the data plane, local control planes, global control plane, and management plane. The data plane (Envoy) is managed by the local control plane (Istio and XCP Edge), and those local control planes are coordinated by the global control plane (XCP Central). Users author configuration through the management plane (TSB), which pushes that configuration to XCP Central, and so on down the layers until the data planes enact it.

This page describes in detail the data flow between the components that Tetrate adds: TSB's management plane, XCP Central, and XCP Edge.

Data Flow

At a high level, data flows from you (through the UI, the API, or the CLI) to the management plane, then to the global control plane (XCP Central), then to the local control planes. Here 'you' includes non-person entities such as CI/CD systems as well as people. Each component along the way stores its own snapshot of the configuration, so it can continue to operate in a steady state if it loses its connection up the chain. The management plane's configuration store is the source of truth for the entire system.
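The snapshot behavior described above can be illustrated with a small sketch. This is not TSB code: the `Component` class, its fields, and the `sync` method are hypothetical names chosen only to show how a layer can keep serving its last known configuration while its upstream link is down.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Component:
    """One layer in the chain (hypothetical model, not a TSB type)."""
    name: str
    upstream: Optional["Component"] = None
    connected: bool = True  # whether the link to `upstream` is currently up
    snapshot: Dict[str, str] = field(default_factory=dict)

    def sync(self) -> bool:
        """Copy the upstream snapshot if reachable; report whether it happened."""
        if self.upstream is None or not self.connected:
            return False
        self.snapshot = dict(self.upstream.snapshot)
        return True

    def effective_config(self) -> Dict[str, str]:
        """Try to refresh, then serve whatever snapshot is held locally."""
        self.sync()
        return self.snapshot


# Management plane -> XCP Central -> XCP Edge, modeled as a simple chain.
mp = Component("management-plane", snapshot={"route": "v1"})
central = Component("xcp-central", upstream=mp)
edge = Component("xcp-edge", upstream=central)
central.sync()
edge.sync()

# Central loses its connection; a later change at the source does not arrive,
# but Edge keeps operating on the last snapshot it received.
central.connected = False
mp.snapshot["route"] = "v2"
central.sync()
steady_state = edge.effective_config()
```

In this model the management plane's snapshot plays the role of the source of truth, and every lower layer only ever holds a copy of it.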

Management Plane

All configuration for TSB starts at the management plane. You can interact with TSB configuration via the UI, the tctl CLI, or the gRPC APIs directly. The UI and tctl are both wrappers on top of TSB's gRPC APIs.

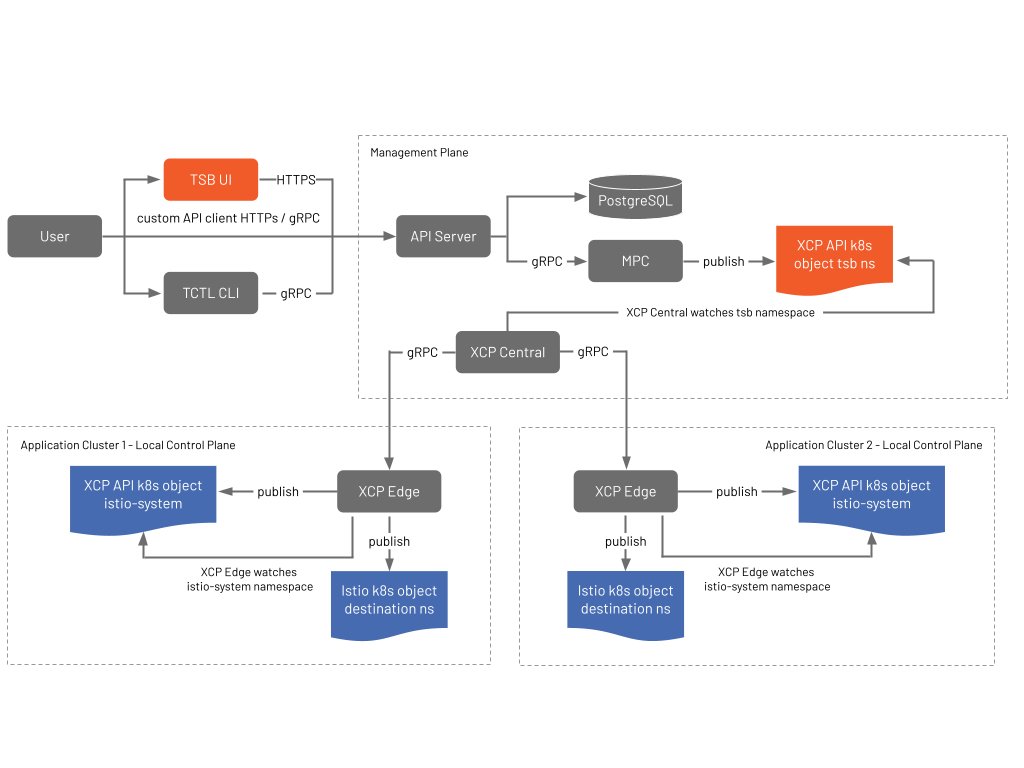

The management plane persists the configuration you push into a database — we typically use a cloud provider's SQL-aaS — as the source of truth for the system, then pushes it to XCP Central.

MPC Component

For legacy reasons XCP Central receives its configuration via Kubernetes CRDs. A shim server called "MPC" establishes a gRPC stream to TSB's API Server to receive configuration and push corresponding CRs into the Kubernetes cluster hosting XCP Central. MPC also sends a report of the runtime state of the system from XCP Central to TSB, to help users administer the mesh.
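As a rough sketch of what a shim of this kind does, the example below reads configuration messages from a stream and wraps each one in a Kubernetes-style custom resource. Everything here is an assumption made for illustration: the message shape, the `example.invalid/v1` API group, and the `apply` callback (a stand-in for a Kubernetes client call) are hypothetical and do not reflect MPC's actual implementation.

```python
from typing import Any, Callable, Dict, Iterable


def to_custom_resource(config: Dict[str, Any], namespace: str) -> Dict[str, Any]:
    """Wrap one configuration message in a CR-shaped dictionary."""
    return {
        "apiVersion": "example.invalid/v1",  # hypothetical API group
        "kind": config["kind"],
        "metadata": {"name": config["name"], "namespace": namespace},
        "spec": config["spec"],
    }


def run_shim(
    stream: Iterable[Dict[str, Any]],
    namespace: str,
    apply: Callable[[Dict[str, Any]], None],
) -> int:
    """Consume the stream, apply one CR per message, return how many were applied."""
    count = 0
    for config in stream:
        apply(to_custom_resource(config, namespace))
        count += 1
    return count


# Usage: a list stands in for the gRPC stream, and list.append for the cluster.
applied = []
messages = [{"kind": "ExampleConfig", "name": "demo", "spec": {"enabled": True}}]
total = run_shim(messages, "demo-namespace", applied.append)
```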

An upcoming release of TSB will remove this component, and TSB's API Server and XCP Central will communicate directly via gRPC.

The Global Control Plane - XCP Central

XCP Central acts as the broker between the management plane and the application clusters running local control planes. There can be many such clusters: some TSB deployments connect hundreds of application clusters. XCP Central handles three broad categories of data:

- runtime configuration: the stuff application developers, platform owners, and security admins author to control the system

- service discovery information: what services are exposed in which clusters, and the entry points for all of those clusters

- information about the system to help administration: non-runtime data like what services exist in each cluster (exposed across clusters or not), namespaces, local control plane versions, config snapshot fingerprints, etc.

When a new application cluster is onboarded into TSB, what we're really doing is deploying XCP Edge (alongside Istio) and configuring it to talk to XCP Central. From there, the cluster is reported to the management plane and any configuration that applies to the new cluster flows immediately. Everything else in the cluster is shown in the management plane ready to be claimed and configured.

XCP Edge connects to XCP Central with a gRPC stream. This bi-directional connection lets XCP Edge report service discovery information and administrative metadata to XCP Central as it changes in the XCP Edge's cluster. Meanwhile, XCP Central uses the connection to push down new user configuration as it receives updates from TSB, and to push service discovery information from remote clusters. XCP Central uses its connection to TSB to report informational data to help with administration.

The Architecture page describes what data XCP Central pushes to XCP Edge instances at a high level. XCP Central publishes only the address of the cross-cluster gateway for each cluster (TSB maintains a gateway dedicated to cross-cluster communication in each cluster), as well as the services deployed in that cluster that are "published". TSB does not make all services in the cluster available across clusters out of the box, but instead requires the service to be exposed once (making it available across all clusters it's deployed in).
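To make the publishing rule concrete, here is a minimal sketch of how the view sent to one cluster could be computed: for every other cluster, only the cross-cluster gateway address and the names of published services are included. The `Service`, `ClusterState`, and `view_for` names, and the shape of the returned dictionary, are hypothetical and are not part of XCP's API.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Service:
    name: str
    published: bool  # whether the service has been exposed across clusters


@dataclass
class ClusterState:
    gateway_address: str  # address of the cluster's cross-cluster gateway
    services: List[Service]


def view_for(target: str, clusters: Dict[str, ClusterState]) -> Dict[str, dict]:
    """Build what `target` learns about every *other* cluster."""
    view = {}
    for name, state in clusters.items():
        if name == target:
            continue  # a cluster already knows its own services
        view[name] = {
            "gateway": state.gateway_address,
            "services": sorted(s.name for s in state.services if s.published),
        }
    return view


clusters = {
    "east": ClusterState("east-gw.example.invalid:15443",
                         [Service("checkout", True), Service("internal-batch", False)]),
    "west": ClusterState("west-gw.example.invalid:15443",
                         [Service("catalog", True)]),
}
west_view = view_for("west", clusters)
```

Here `west_view` contains the east gateway address and `checkout`, but not `internal-batch`, because that service was never published.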

XCP Central Data Store

Today XCP Central stores a snapshot of its local state as Kubernetes CRs in the cluster it's deployed in. This snapshot is used when XCP Central cannot connect to the management plane and XCP Central itself needs to restart, and so cannot rely on an in-memory cache.

When XCP Central receives its configuration directly from TSB via gRPC in a future release, XCP Central will persist its configuration in a database similar to the management plane.

The Local Control Plane - XCP Edge

XCP Edge receives configuration from XCP Central and translates it into native Istio objects that are specialized for the cluster that Edge is deployed in. It publishes those objects to the Kubernetes API server so Istio can consume them exactly as normal.

The configuration XCP Edge receives is not native Istio configuration, but its own set of CRDs. It publishes these into the local control plane namespace (istio-system by default) to act as a local cache, in case XCP Edge loses its connection to XCP Central. It also processes that configuration into Istio CRs (i.e. normal Istio API objects) to control mesh and gateway behavior in the local cluster.

Finally, to enable the cross-cluster communication we've described in previous concepts docs, XCP Edge publishes Istio configuration into a special namespace (xcp-multicluster by default). This configuration manages how services are exposed across the mesh using the dedicated cross-cluster gateways that TSB deploys for this purpose.

Detailed Data Flow

At this point the configuration that was updated by the user has traveled all the way down to the Envoy data plane in the onboarded clusters. The data flow for user configuration can be summarized as follows:

- TSB API Server stores the data in its datastore

- TSB pushes configuration to XCP Central

- XCP Central pushes configuration to XCP Edge instances

- XCP Edge stores incoming objects in the control plane's namespace (istio-system by default)

- XCP Edge subscribes to updates in the control plane's namespace and publishes native Istio objects when those resources change

- Istio processes the configuration and pushes it to Envoys just like normal
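The store-then-subscribe steps at XCP Edge in the list above can be sketched with an in-memory stand-in for a namespace. This is an illustration under stated assumptions, not XCP Edge's implementation: `NamespaceStore`, `watch`, `put`, and `translate` are hypothetical names, and `translate` returns a placeholder object rather than a real Istio resource.

```python
from typing import Callable, Dict, List

Watcher = Callable[[str, dict], None]


class NamespaceStore:
    """In-memory stand-in for the objects held in one Kubernetes namespace."""

    def __init__(self) -> None:
        self.objects: Dict[str, dict] = {}
        self._watchers: List[Watcher] = []

    def watch(self, callback: Watcher) -> None:
        """Register a callback that fires whenever a stored object changes."""
        self._watchers.append(callback)

    def put(self, name: str, obj: dict) -> None:
        """Store an object and notify watchers, skipping no-op updates."""
        if self.objects.get(name) == obj:
            return
        self.objects[name] = obj
        for callback in self._watchers:
            callback(name, obj)


def translate(name: str, obj: dict) -> dict:
    """Placeholder translation; a real one would emit native Istio objects."""
    return {"kind": "PlaceholderIstioObject", "name": name, "source": obj}


control_plane_ns = NamespaceStore()
published: List[dict] = []
control_plane_ns.watch(lambda name, obj: published.append(translate(name, obj)))

control_plane_ns.put("demo", {"version": 1})  # new object: published
control_plane_ns.put("demo", {"version": 1})  # unchanged: nothing published
control_plane_ns.put("demo", {"version": 2})  # changed: published again
```

Keeping the received objects in the namespace before translating them is what lets the stored copy double as a local cache when the upstream connection is lost.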

Out of band of user configuration, service discovery information is collected and distributed by XCP Central across XCP Edge instances:

- XCP Edge sends updates to XCP Central about exposed Services

- XCP Central distributes this cluster state to each of the XCP Edges

- XCP Edge updates configurations in the multi-cluster namespace (xcp-multicluster) if necessary

- XCP Edge subscribes to updates in the multi-cluster namespace and publishes native Istio objects when those resources change

- Istio processes the configuration and pushes it to Envoys just like normal

TSB and Git

There are two places where TSB fits naturally into a GitOps flow:

- Receiving configuration from a CI/CD system, which is maintaining a source of truth in git. This works today and we have many customers doing it — check out our Service Mesh and GitOps guide for an overview.

- TSB's management plane checking configuration in to git that your existing CI/CD system deploys into each cluster. It's possible to operate TSB in a similar mode yourself today — we've had deployments with application clusters across air gaps, for example — but first-class support in TSB itself is planned for a future release.